Guinea Pig Pizza: Ecuador

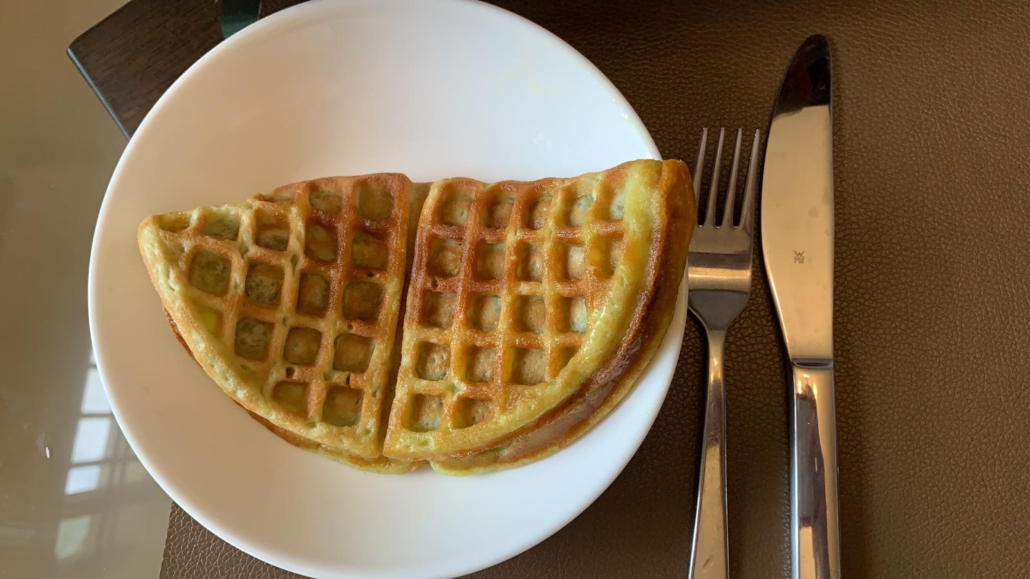

Image recognition (IR) systems often perform poorly once deployed in the real world. In this post, I test four of the most popular IR systems on original real-world images of food from around the world, this time from Ecuador.

Key takeaway

The IR systems performed very poorly for both object detection and labeling. Cuy was not recognized at all across four images. Several (cultural) misrepresentations were present.

| Metric | Result |
| --- | --- |
| Correctly predicted images | 0/4 |
| Correctly detected items | 0/4 |
| Correct labels | 0/89 |
| Potentially harmful detections/labels | 6 |

Insights

Object Detection

The object detection systems failed to accurately describe the Cuy in any of the four images. Vision gave the general description of Food for the Cuy in all images, while Rekognition gave the description of Pizza for the Cuy in all four images. As such, object detection performed very poorly.
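To make the tally concrete, here is a minimal sketch that scores the object detection outputs reported in the results section of this post. The `detections` dictionary, the `is_correct` rule (a case-insensitive exact match against the ground truth), and the helper names are illustrative assumptions, not the scoring procedure of any of the services. IBM Watson is left out because its column in the results only shows "/".

```python
from statistics import mean

GROUND_TRUTH = "cuy"

# (label, confidence) per image, copied from the object detection tables in this post.
# None means the system returned no detection for the Cuy.
detections = {
    "Microsoft Azure": [None, None, None, None],
    "Google Vision": [("Food", 0.66), ("Food", 0.73), ("Food", 0.77), ("Food", 0.77)],
    "Amazon Rekognition": [("Pizza", 0.74), ("Pizza", 0.84), ("Pizza", 0.91), ("Pizza", 0.62)],
}


def is_correct(detection):
    """Assumed rule: a detection counts only if it names the ground truth."""
    return detection is not None and detection[0].lower() == GROUND_TRUTH


def summarize(results):
    """Return (correct count, image count, mean confidence of returned detections)."""
    returned = [d for d in results if d is not None]
    correct = sum(is_correct(d) for d in results)
    avg_conf = mean(conf for _, conf in returned) if returned else None
    return correct, len(results), avg_conf


summary = {system: summarize(results) for system, results in detections.items()}

for system, (correct, total, avg_conf) in summary.items():
    conf_text = "n/a" if avg_conf is None else f"{avg_conf:.2f}"
    print(f"{system}: {correct}/{total} correct, mean confidence {conf_text}")
```

Under this rule every system scores 0/4. The mean confidence is included only to show that a wrong label such as Pizza can still come with a fairly high score.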

Labeling

The labeling systems performed very poorly as well. The labels remained surface level and did not come close to describing the meal. Perhaps the most relevant label was Fried Food. This calls into question the usefulness of the results for the presented meal.

Furthermore, several cultural misrepresentations were present, the most obvious being Rekognition consistently mistaking the Cuy for pizza. With labels such as Hendl and British cuisine, Vision also gave descriptions that significantly misrepresent the meal.

As in previous analyses, we have to address the confusion between meats, mostly by Vision. While Cuy is a typical dish in Ecuador and neighboring countries, people from other cultures might prefer not to eat it. Yet the systems described the meal as chicken meat, duck meat, turkey meat, pork, etc. Someone who relied too heavily on these labels might eat Cuy while believing it to be something else.
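One way to surface this kind of confusion automatically is to flag any label that names a specific meat other than the one actually on the plate. The sketch below does this for a subset of the labels from the first labeling table in this post. The keyword list `SPECIFIC_MEATS`, the confidence threshold, and the function name are hypothetical choices for illustration only.

```python
# Labels and confidences copied from the first labeling table in this post (subset).
labels = [
    ("Lechona", 0.90), ("Chicken meat", 0.80), ("Fried food", 0.80),
    ("Duck meat", 0.78), ("Turkey meat", 0.75), ("Meat", 0.74),
    ("Drunken chicken", 0.73), ("Pork", 0.66), ("Hendl", 0.63),
]

# Hypothetical keyword list: words whose presence in a label implies a specific meat.
SPECIFIC_MEATS = ("chicken", "duck", "turkey", "pork", "beef", "lamb")


def flag_wrong_meat(labels, threshold=0.5):
    """Return labels at or above the threshold that name a meat from SPECIFIC_MEATS.

    The meal here is guinea pig, which is not in the keyword list,
    so every keyword hit is a mislabel for this dish.
    """
    flagged = []
    for name, confidence in labels:
        lowered = name.lower()
        if confidence >= threshold and any(meat in lowered for meat in SPECIFIC_MEATS):
            flagged.append((name, confidence))
    return flagged


flagged = flag_wrong_meat(labels)
for name, confidence in flagged:
    print(f"Potentially harmful label: {name} ({confidence})")
```

A plain keyword match like this misses dish names: Hendl (a roast chicken dish) and Lechona (a roast pork dish) pass through unflagged, so a real check would also need a curated mapping from dish names to their main ingredient.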

Finally, we see that Rekognition primarily returns labels for the laptop in the background. While these are obviously not wrong, I wonder whether Rekognition found it easier to report something more common and visually simple or distinctive, and therefore returned few results for the food.

Suggestions for improvement

- Provide more specific and relevant labels for Cuy;

- Address (cultural) misrepresentations (e.g. Cuy is not pizza);

- Make sure labels of meat do not harm people of certain religions or with certain diets (e.g. Cuy is not duck meat or chicken meat);

- Check how well the systems can distinguish between less relevant, yet visually simple background objects and meals in the foreground (especially for Rekognition).
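The last suggestion can be checked with a rough measure: the share of returned labels that describe the background rather than the meal. The sketch below applies it to the laptop-heavy labels from the first labeling table in this post, read here as Rekognition's output in line with the discussion above. The keyword list `BACKGROUND_KEYWORDS` and the function names are assumptions made for this example.

```python
# Labels copied from the first labeling table in this post, read here as
# Amazon Rekognition's output (the laptop-heavy column).
rekognition_labels = [
    ("Computer Keyboard", 0.98), ("Hardware", 0.98), ("Keyboard", 0.98),
    ("Computer Hardware", 0.98), ("Computer", 0.98), ("Electronics", 0.98),
    ("Pc", 0.97), ("Laptop", 0.94), ("Food", 0.79), ("Pizza", 0.74),
]

# Hypothetical keyword list marking labels that describe the laptop, not the meal.
BACKGROUND_KEYWORDS = ("computer", "keyboard", "hardware", "electronics", "pc", "laptop")


def is_background(label):
    """True if any whole word of the label is a background keyword."""
    return any(word in BACKGROUND_KEYWORDS for word in label.lower().split())


def background_share(labels):
    """Fraction of labels that describe the background (0.0 for an empty list)."""
    if not labels:
        return 0.0
    hits = sum(is_background(name) for name, _ in labels)
    return hits / len(labels)


share = background_share(rekognition_labels)
print(f"{share:.0%} of the labels describe the background")
```

For this image, eight of the ten labels concern the laptop, and the two that remain (Food and Pizza) are the ones with the lowest confidence.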

Results

Four images of one meal from Ecuador were available:

- Meal 1: Cuy (Fried Guinea pig) (Lunch)

Object detection results.

| GROUND TRUTH | MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- | --- |
| Cuy | Undetected | Food (0.66) | Pizza (0.74) | / |

*Green = the right prediction; Yellow = the right prediction, but too general; Red = potentially harmful prediction; White = largely not relevant

Labeling Results:

| MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- |
| | Food (0.98) | Computer Keyboard (0.98) | nutrition (0.95) |
| | Laptop (0.94) | Hardware (0.98) | food (0.95) |
| | Lechona (0.9) | Keyboard (0.98) | reddish orange color (0.86) |
| | Computer (0.89) | Computer Hardware (0.98) | dish (0.83) |
| | Ingredient (0.88) | Computer (0.98) | light brown color (0.83) |
| | Tableware (0.88) | Electronics (0.98) | Apple Pie (0.78) |
| | Recipe (0.86) | Pc (0.97) | dessert (0.78) |
| | Chicken meat (0.8) | Laptop (0.94) | fish and chips (0.67) |
| | Fried food (0.8) | Food (0.79) | turnover (0.51) |
| | Roasting (0.79) | Pizza (0.74) | samosa (0.5) |
| | Cuisine (0.79) | | |
| | Cooking (0.78) | | |
| | Duck meat (0.78) | | |
| | Produce (0.76) | | |
| | Turkey meat (0.75) | | |
| | Dish (0.75) | | |
| | Meat (0.74) | | |
| | Drunken chicken (0.73) | | |
| | Vegetable (0.71) | | |
| | Personal computer (0.7) | | |
| | Fast food (0.7) | | |
| | Comfort food (0.66) | | |
| | Pork (0.66) | | |
| | Hendl (0.63) | | |
| | Flesh (0.63) | | |

Object detection results.

| GROUND TRUTH | MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- | --- |
| Cuy | Undetected | Food (0.73) | Pizza (0.84) | / |

*Green = the right prediction; Yellow = the right prediction, but too general; Red = potentially harmful prediction; White = largely not relevant

Labeling Results:

| MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- |
| food_grilled (0.69) | Food (0.98) | Pc (0.97) | light brown color (0.91) |
| | Tableware (0.93) | Electronics (0.97) | nutrition (0.87) |
| | Laptop (0.88) | Computer (0.97) | food (0.87) |
| | Ingredient (0.88) | Food (0.91) | fish and chips (0.87) |
| | Recipe (0.87) | Laptop (0.88) | dish (0.87) |
| | Computer (0.84) | Pizza (0.84) | food product (0.79) |
| | Chicken meat (0.84) | Computer Keyboard (0.83) | |
| | Deep frying (0.83) | Hardware (0.83) | |
| | Cuisine (0.81) | Keyboard (0.83) | |
| | Dish (0.78) | Computer Hardware (0.83) | |
| | Drunken chicken (0.78) | | |
| | Plate (0.78) | | |
| | Produce (0.77) | | |
| | Fried food (0.77) | | |
| | Vegetable (0.76) | | |
| | Cooking (0.75) | | |
| | Hendl (0.75) | | |
| | Meat (0.74) | | |
| | Seafood (0.72) | | |
| | Roasting (0.72) | | |
| | Comfort food (0.7) | | |
| | Fast food (0.7) | | |
| | Duck meat (0.69) | | |
| | Frying (0.67) | | |
| | British cuisine (0.66) | | |

Object detection results.

| GROUND TRUTH | MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- | --- |
| Cuy | Undetected | Food (0.77) | Pizza (0.91) | / |

*Green = the right prediction; Yellow = the right prediction, but too general; Red = potentially harmful prediction; White = largely not relevant

Labeling Results:

| MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- |
| | Food (0.98) | Pc (0.99) | reddish orange color (0.95) |
| | Computer (0.98) | Computer (0.99) | nutrition (0.75) |
| | Laptop (0.95) | Electronics (0.99) | food (0.75) |
| | Tableware (0.93) | Laptop (0.99) | dish (0.75) |
| | Personal computer (0.93) | Computer Keyboard (0.97) | fish and chips (0.75) |
| | Ingredient (0.89) | Hardware (0.97) | |
| | Recipe (0.86) | Keyboard (0.97) | |
| | Input device (0.84) | Computer Hardware (0.97) | |
| | Cuisine (0.83) | Pizza (0.91) | |
| | Dish (0.83) | Food (0.91) | |
| | Fast food (0.79) | | |
| | Chicken meat (0.79) | | |
| | Peripheral (0.77) | | |
| | Fried food (0.76) | | |
| | Produce (0.75) | | |
| | Netbook (0.74) | | |
| | Space bar (0.74) | | |
| | Meat (0.74) | | |
| | Drunken chicken (0.74) | | |
| | Output device (0.73) | | |
| | Cooking (0.72) | | |
| | Junk food (0.71) | | |
| | Comfort food (0.69) | | |
| | Baked goods (0.68) | | |
| | Touchpad (0.66) | | |

Object detection results.

| GROUND TRUTH | MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- | --- |
| Cuy | Undetected | Food (0.77) | Pizza (0.62) | / |

*Green = the right prediction; Yellow = the right prediction, but too general; Red = potentially harmful prediction; White = largely not relevant

Labeling Results:

| MICROSOFT AZURE | GOOGLE VISION | AMAZON REKOG. | IBM WATSON |
| --- | --- | --- | --- |
| food_grilled (0.67) | Food (0.98) | Computer Keyboard (0.99) | fish and chips (0.92) |
| | Computer (0.98) | Hardware (0.99) | dish (0.92) |
| | Laptop (0.96) | Keyboard (0.99) | nutrition (0.92) |
| | Personal computer (0.95) | Computer Hardware (0.99) | food (0.92) |
| | Ingredient (0.88) | Computer (0.99) | reddish orange color (0.79) |
| | Input device (0.88) | Electronics (0.99) | light brown color (0.57) |
| | Recipe (0.87) | Pc (0.98) | |
| | Tableware (0.83) | Laptop (0.96) | |
| | Output device (0.82) | Food (0.78) | |
| | Chicken meat (0.81) | Pizza (0.62) | |
| | Cuisine (0.78) | Pork (0.58) | |
| | Cooking (0.78) | | |
| | Office equipment (0.76) | | |
| | Fried food (0.76) | | |
| | Space bar (0.75) | | |
| | Produce (0.75) | | |
| | Dish (0.75) | | |
| | Meat (0.74) | | |
| | Roasting (0.73) | | |
| | Plate (0.72) | | |
| | Laptop part (0.71) | | |
| | Deep frying (0.71) | | |
| | Fried chicken (0.7) | | |
| | Comfort food (0.68) | | |
| | Computer hardware (0.68) | | |