To Gluten or Not to Gluten: The US

Image recognition (IR) systems often perform poorly once deployed in the real world. In this post, I test four of the most popular IR systems on original, real-world images of food from around the world, this time from the US.

Key takeaway

Overall, the systems’ performance was disappointing. “Bread” appeared somewhat easy to detect, but “Soup” did not. The labeling features were more generous and did pick up the soup, though a specific description remained elusive. Finally, one wonders whether labels such as “Gluten” and “Sugar” can be determined simply from a picture of a meal, and what the consequences of including these labels could be.

| Metric | Result |
|---|---|
| Correctly predicted images | 0/1 |
| Correctly detected items | 0/3 |
| Correct labels | 1/19 |
| Potentially harmful detections/labels | 0 |

Insights

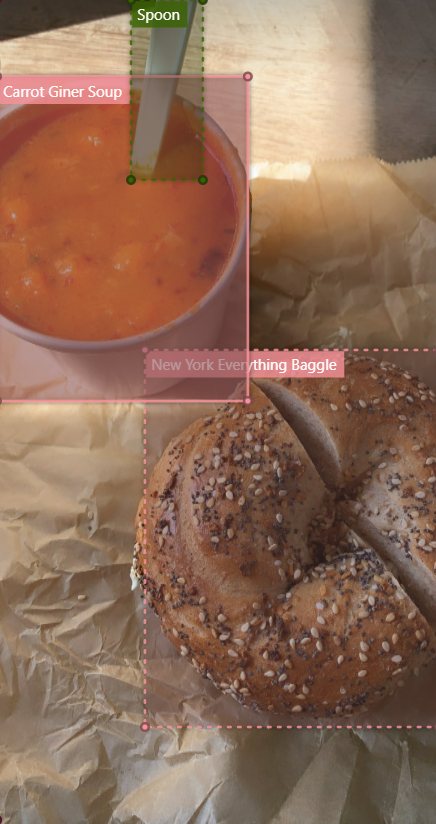

The object detection features performed poorly on the selected image, though Azure and Rekognition did detect “Baked Goods” and “Bread”, respectively. Although the bagel itself was not specifically identified, the image’s perspective would perhaps lead many humans to say “bread” instead of “bagel” as well. However, Azure and Google Vision detected the soup simply as “Food” (it went undetected by Rekognition and Watson), which is a very general description for a fairly typical part of a Western meal.

The labeling features again performed better. “Soup” was labeled, as were “Bread”, “Baked Goods”, “Bun”, and “Seed”. Unfortunately, many labels were also too general (e.g. “Food”, “Meal”, “Dish”), irrelevant (e.g. “Recipe”, “Nutrition”), wrong (e.g. “Cake”, “Chocolate”, “Mole”), or a cultural misrepresentation (e.g. “Curry”).

Lastly, labels such as “Gluten” and “Sugar” are perhaps not wrong, but they are hard to discern from an image (e.g. gluten-free and sugar-free bagels also exist). Because these labels could have a strong impact on people with certain diets, this raises the question of whether such labels should be present in IR systems at all.

My recommendation

As always, the developers should implement more specific and relevant labels. Bread seems to be recognized well, so the developers can be proud of that. Developers should also examine labels such as “Gluten” and “Sugar” and whether these can actually be recognized from a picture of a meal.

Results

An image of one meal from the US was available:

- Meal 1: New York “Everything” Bagel and Tomato Soup (Lunch)

Object detection results*:

| Ground Truth | Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|---|
| Cup of Soup | Food (0.53) | Food (0.73) | Undetected | / |
| Spoon | Undetected | Undetected | Undetected | / |
| "Everything" Bagel | Baked Goods (0.77) | Food (0.63) | Bread (0.99) | / |

*Green = the right prediction; Yellow = the right prediction, but too general; Red = potentially harmful prediction; White = largely not relevant

Labeling results:

| Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|
| Baked Goods (0.99) | Food (0.99) | Bread (0.99) | Light Brown Color (0.85) |
| Food (0.97) | Ingredient (0.91) | Food (0.99) | Food (0.71) |
| Dessert (0.93) | Tableware (0.88) | Bun (0.88) | Reddish Orange Color (0.76) |
| Bread (0.93) | Recipe (0.87) | Bowl (0.64) | Nutrition (0.58) |
| Chocolate (0.83) | Cuisine (0.86) | Food Product (0.56) | |
| Recipe (0.82) | Dish (0.85) | Sauce (0.56) | |
| Cake (0.79) | Seed (0.81) | Condiment (0.56) | |
| Delicious (0.78) | Staple Food (0.80) | Food Seasoning (0.56) | |
| Fast Food (0.75) | Produce (0.80) | Food Ingredient (0.56) | |
| Ingredient (0.60) | Soup (0.79) | Mole (0.54) | |
| Gluten (0.60) | Bun (0.78) | | |
| Staple Food (0.54) | Gravy (0.78) | | |
| Gravy (0.52) | Cake (0.76) | | |
| Dish (0.51) | Gluten (0.76) | | |
| | Bread (0.74) | | |
| | Stew (0.73) | | |
| | Curry (0.72) | | |
| | Baked Goods (0.71) | | |
| | Sugar (0.69) | | |
| | Bowl (0.69) | | |
| | Bread Roll (0.68) | | |
| | Finger Food (0.67) | | |
| | Baking (0.67) | | |
| | Brown Bread (0.65) | | |
| | Comfort Food (0.65) | | |