Of course there’s Beer: Belgium

Image recognition (IR) systems often perform poorly once deployed in the real world. In this post, I test four of the most popular IR systems on original real-world images of food from around the world, this time from Belgium.

Key takeaway

Overall, the systems performed poorly, though Amazon Rekognition stood out by providing almost all necessary labels for two of the meals. Nonetheless, none of the meals was fully detected or labeled. IBM Watson appeared to be the most specific, but unfortunately mostly with the wrong labels.

| Metric | Score |
|---|---|
| Correctly predicted images | 0/3 |
| Correctly detected items | 5/35 |
| Correct labels | 6/61 |
| Harmful detections/labels | 1 |
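For a quick sense of scale, the summary scores above can be expressed as percentages. The Python sketch below simply does that arithmetic; the variable and function names are illustrative only.

```python
# Summary scores from the key-takeaway table: metric -> (correct, total)
scores = {
    "Correctly predicted images": (0, 3),
    "Correctly detected items": (5, 35),
    "Correct labels": (6, 61),
}


def as_percent(correct: int, total: int) -> float:
    """Return correct/total as a percentage, rounded to one decimal place."""
    return round(100 * correct / total, 1)


for metric, (correct, total) in scores.items():
    print(f"{metric}: {correct}/{total} = {as_percent(correct, total)}%")
```

This gives 0.0%, 14.3% and 9.8%: roughly one in seven detected items and one in ten labels were judged correct.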

Insights

Across all systems, utensils and tableware such as forks, knives and cups appeared to be more easily recognized than the food itself. For the food, the systems mostly used general terms (e.g. food, bottle, cup) and failed to provide specifics (e.g. fries, beer bottle, cup of coffee). This suggests that the systems are not prepared for the visual complexity that meals inherently present.
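The gap between general and specific labels can be made concrete with the labeling results for Meal 1 (fries, peanut sauce and a Vedett beer). The Python sketch below copies those labels (confidence scores omitted) and checks which systems returned a specific term from the meal description. Treating "fries" and "beer" as the key terms is an assumption for illustration, not the scoring rule used in this post.

```python
# Meal 1 labels per system, copied from the labeling results (scores omitted)
meal1_labels = {
    "Microsoft Azure": ["Food", "Fast food", "Indoor", "Drink", "Bottle", "Snack", "Meal", "Fries"],
    "Google Vision": ["Food", "Bottle", "Tableware", "Ingredient", "Staple food", "Recipe"],
    "Amazon Rekognition": ["Beer", "Alcohol", "Bottle", "Drink", "Food", "Dish"],
    "IBM Watson": ["Nutrition", "Food", "Food product", "Meal", "Chocolate color", "Waffles"],
}

# Hypothetical key terms taken from the ground truth "Fries and peanut sauce with a Vedett Beer"
specific_terms = {"fries", "beer"}


def specific_hits(labels: list[str], terms: set[str]) -> list[str]:
    """Return the labels that exactly match one of the terms (case-insensitive)."""
    return [label for label in labels if label.lower() in terms]


for system, labels in meal1_labels.items():
    print(f"{system}: {specific_hits(labels, specific_terms)}")
```

Only Azure ("Fries") and Rekognition ("Beer") return one specific term each, and no system mentions the peanut sauce; the remaining labels are general terms such as "Food", "Bottle" or "Tableware".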

While food recognition systems present no immediate harm, (cultural) misrepresentations were common (e.g. labeling vegetables as custard or creme brulee [sic]). IBM Watson was the most guilty of misrepresentation, but it was also the only system to provide more specific labels. In this sense, the IR systems need to be much more specific in order to be useful, but developers should be careful, as greater specificity also opens up more room for harm through misrepresentation.

While the labeling features of these systems had some merit, their object detection features often disappointed. They commonly failed to detect the meals at all, and when they did, their descriptions were too general.

Finally, some forks and knives remained largely undetected, even though they were clearly visible and recognizable to the human eye. Although this was not explicitly tested, unfamiliar lighting conditions in the images may have played a role.
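The object detection tables in the Results section can be tallied to see how often each system missed an item outright. The Python sketch below transcribes those tables (scores omitted; IBM Watson left out because all of its detection cells are marked "/") and counts the "Undetected" cells per system. It is an illustrative tally, not the procedure behind the summary scores.

```python
from collections import Counter

SYSTEMS = ("Microsoft Azure", "Google Vision", "Amazon Rekognition")

# One row per ground-truth item: (item, Azure, Google Vision, Rekognition)
detections = [
    # Meal 1
    ("Beer bottle", "Bottle", "Packaged goods", "Undetected"),
    ("Fries with peanut sauce", "Food", "Food", "Ice cream"),
    # Meal 2
    ("Cup of coffee", "Cup", "Coffee cup", "Undetected"),
    ("Oranges", "Undetected", "Tableware", "Undetected"),
    ("Oatmeal", "Bowl", "Tableware", "Undetected"),
    ("Mixed fruit", "Bowl", "Food", "Undetected"),
    ("Spoon", "Spoon", "Undetected", "Spoon"),
    ("Spoon", "Kitchen Utensil", "Tableware", "Undetected"),
    # Meal 3
    ("Carrots and tomatoes", "Food", "Food", "Undetected"),
    ("Beans, lettuce and tofu", "Undetected", "Food", "Undetected"),
    ("Fork", "Undetected", "Undetected", "Fork"),
    ("Knife", "Undetected", "Undetected", "Undetected"),
]


def count_undetected(rows: list[tuple[str, str, str, str]]) -> Counter:
    """Count, per system, how many ground-truth items it did not detect at all."""
    misses = Counter({system: 0 for system in SYSTEMS})
    for _item, *predictions in rows:
        for system, prediction in zip(SYSTEMS, predictions):
            if prediction == "Undetected":
                misses[system] += 1
    return misses


misses = count_undetected(detections)
for system in SYSTEMS:
    print(f"{system}: {misses[system]} of {len(detections)} items undetected")
```

This yields 4, 3 and 9 misses out of 12 items for Azure, Google Vision and Rekognition respectively. It counts only outright misses: a cell such as "Food" for the fries counts as detected here even though it is too general, so these numbers do not replace the post's own "correctly detected" score.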

My recommendation

Developers of all four systems need to significantly improve performance. For Azure, Google Vision and Rekognition, this means providing more specific labels; for Watson, it means getting the specific labels right. A lot of (cultural) nuance is currently lost in these systems.

Results

Images of three different meals from Belgium were available:

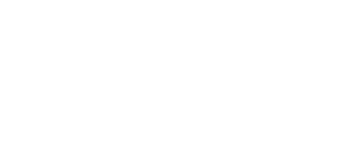

- Meal 1: Fries and peanut sauce with a Vedett Beer (lunch)

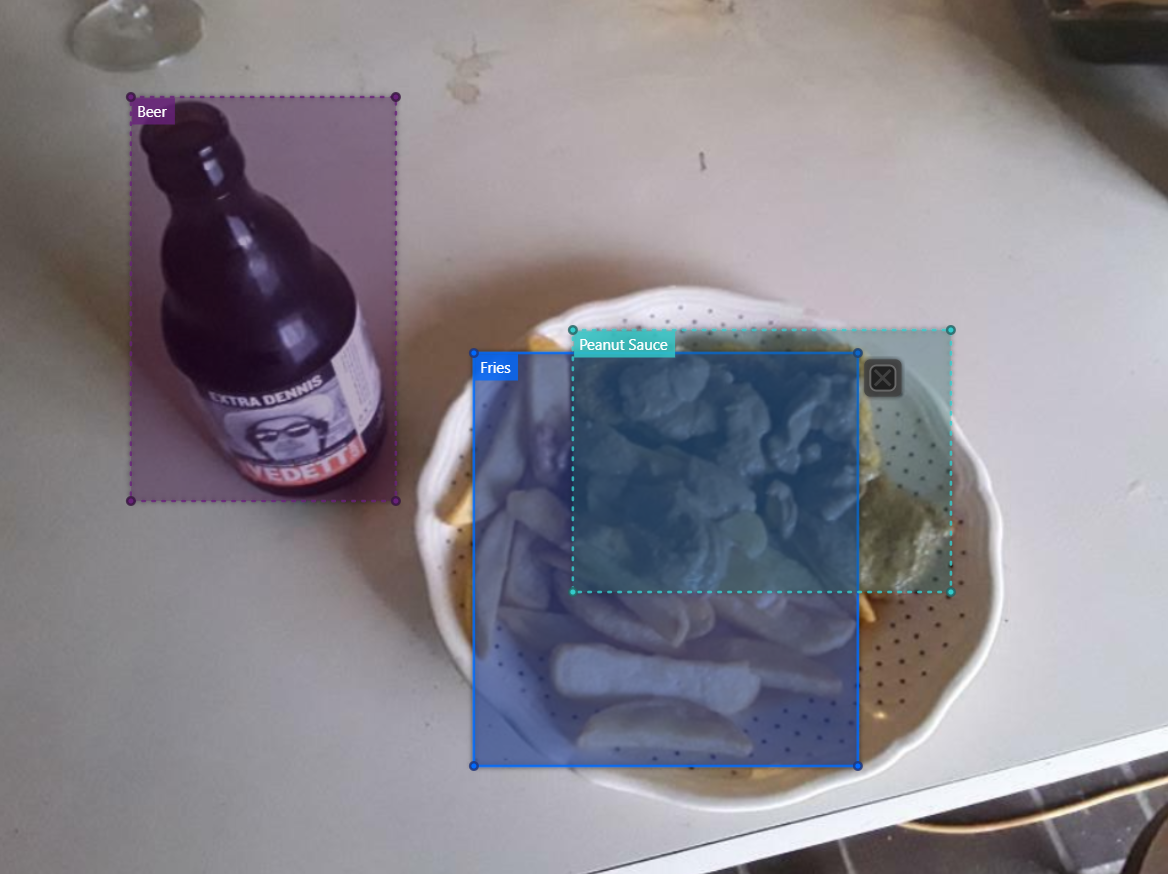

- Meal 2: Oatmeal, coffee, oranges, and mixed fruit (breakfast)

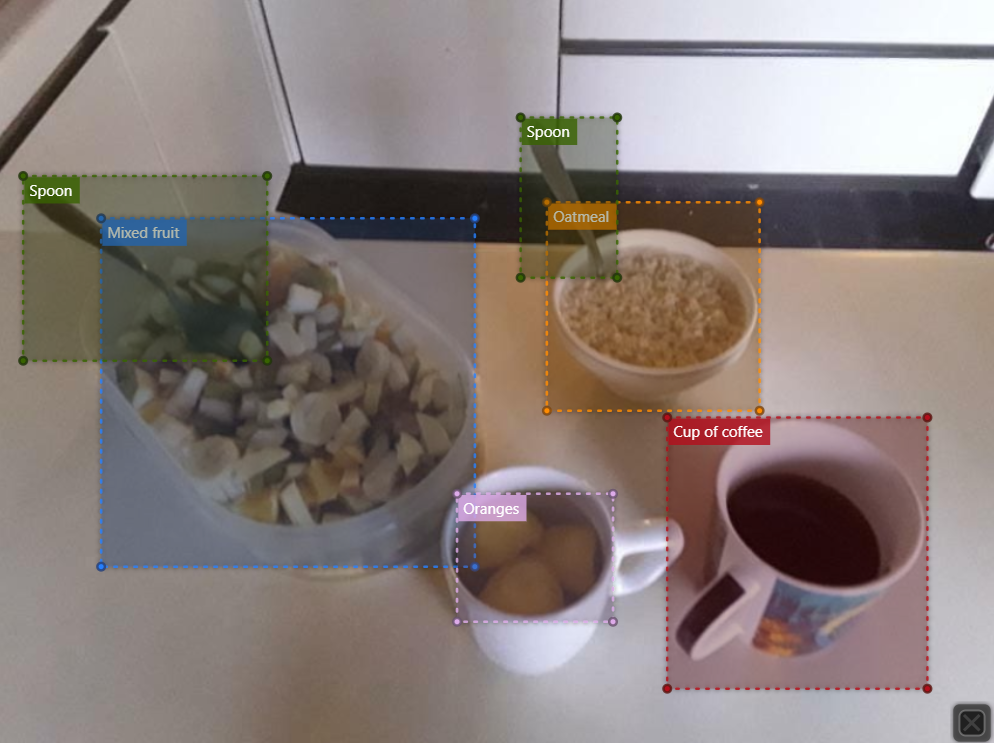

- Meal 3: Baked tofu, white beans, lettuce, sliced tomato and grated carrots (lunch)

Meal 1 object detection results*:

| Ground Truth | Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|---|
| Beer bottle | Bottle (0.772) | Packaged goods (0.88) | Undetected | / |
| Fries with peanut sauce | Food (0.61) | Food (0.51) | Ice cream | / |

*Green = correct prediction; Yellow = correct prediction, but too general; Red = potentially harmful prediction; White = largely not relevant

Meal 1 labeling results:

| Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|
| Food (0.99) | Food (0.98) | Beer (0.88) | Nutrition (0.85) |
| Fast food (0.98) | Bottle (0.93) | Alcohol (0.88) | Food (0.85) |
| Indoor (0.95) | Tableware (0.91) | Bottle (0.93) | Food product (0.79) |
| Drink (0.80) | Ingredient (0.88) | Drink (0.88) | Meal (0.77) |
| Bottle (0.76) | Staple food (0.87) | Food (0.84) | Chocolate color (0.66) |
| Snack (0.66) | Recipe (0.86) | Dish (0.83) | Waffles (0.65) |
| Meal (0.83) | | | |
| Fries (0.82) | | | |

Meal 2 object detection results:

| Ground Truth | Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|---|
| Cup of coffee | Cup | Coffee cup | Undetected | / |
| Oranges | Undetected | Tableware | Undetected | / |
| Oatmeal | Bowl | Tableware | Undetected | / |
| Mixed fruit | Bowl | Food | Undetected | / |
| Spoon | Spoon | Undetected | Spoon | / |
| Spoon | Kitchen Utensil | Tableware | Undetected | / |

Meal 2 labeling results:

| Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|
| Indoor (0.98) | Food (0.98) | Spoon (0.99) | Pale yellow color (0.94) |
| Food (0.97) | Tableware (0.97) | Cutlery (0.99) | Food (0.86) |
| Bowl (0.80) | Dishware (0.93) | Breakfast (0.92) | Nutrition (0.81) |
| Snack (0.69) | Ingredient (0.91) | Food (0.92) | Beige color (0.70) |
| Breakfast (0.58) | Mixing bowl (0.89) | Bowl (0.89) | Dish (0.67) |
| Mixing bowl (0.54) | Drinkware (0.89) | Coffee cup (0.85) | Dessert (0.63) |
| | Serveware (0.87) | Cup (0.85) | Donuts (0.63) |
| | Cuisine (0.85) | Oatmeal (0.57) | Fried Calamari (0.56) |
| | Cup (0.85) | Food (0.98) | |

Meal 3 object detection results:

| Ground Truth | Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|---|
| Carrots and tomatoes | Food | Food | Undetected | / |
| Beans, lettuce and tofu | Undetected | Food | Undetected | / |
| Fork | Undetected | Undetected | Fork | / |
| Knife | Undetected | Undetected | Undetected | / |

Meal 3 labeling results:

| Microsoft Azure | Google Vision | Amazon Rekognition | IBM Watson |
|---|---|---|---|
| Plate (0.99) | Food (0.98) | Plant (0.99) | Nutrition (0.80) |
| Table (0.99) | Tableware (0.95) | Produce (0.95) | Food (0.80) |
| Food (0.97) | Dishware (0.92) | Food (0.95) | Dish (0.80) |
| Indoor (0.88) | Ingredient (0.90) | Vegetable (0.90) | Beef Tartare (0.62) |
| Dessert (0.82) | Recipe (0.88) | Lentil (0.74) | Food product (0.60) |
| Recipe (0.80) | Liquid (0.84) | Pottery (0.62) | Creme brulee (0.55) [sic] |
| Delicious (0.79) | Cuisine (0.83) | Vegetation (0.60) | Custard (0.55) |
| Chocolate (0.69) | Kitchen Utensil (0.81) | Dish (0.60) | Risotto (0.50) |